Artificial intelligence (AI) has become a ubiquitous term, woven into the fabric of our daily lives. From facial recognition on smartphones to chatbots providing customer service, AI is silently shaping our world. At the heart of this revolution lie neural networks, complex algorithms inspired by the human brain. But how do these networks learn, and what are the different architectures that power their capabilities?

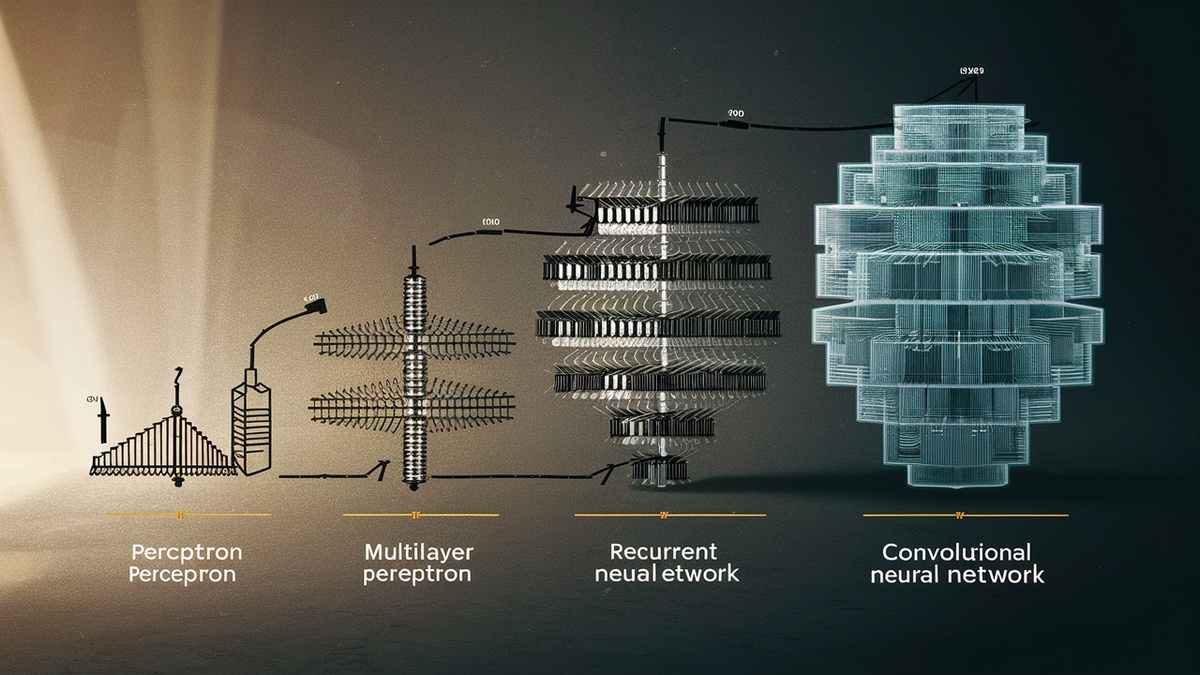

This blog post embarks on a journey through the fascinating world of neural network architectures, tracing their evolution from the humble beginnings of perceptrons to the powerful convolutional networks that drive image recognition today.

The Birth of an Idea: The Perceptron

The story begins in the late 1950s with the perceptron, introduced by Frank Rosenblatt and building on the artificial neuron model that McCulloch and Pitts proposed in the 1940s. This simple model, inspired by biological neurons, aimed to mimic basic learning capabilities. A perceptron receives multiple inputs, multiplies each by a weight, and sums the results. If the weighted sum exceeds a certain threshold, the perceptron fires and outputs 1; otherwise it outputs 0.
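To make the weighted-sum-and-threshold idea concrete, here is a minimal sketch in Python. The function name `perceptron_output` and the specific weights and threshold are illustrative choices for this post, not values from Rosenblatt's original work.

```python
def perceptron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Illustrative (hand-picked) weights that make the unit behave like a logical AND:
# only when both inputs are 1 does the sum (1.0) exceed the threshold (0.75).
and_weights = [0.5, 0.5]
and_threshold = 0.75

for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, "->", perceptron_output(pair, and_weights, and_threshold))
```

Only the input `(1, 1)` makes the unit fire here; the other three pairs stay below the threshold.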

While seemingly rudimentary, the perceptron laid the foundation for future advancements. It introduced the concept of learning through adjusting weights. By modifying these weights based on training data and desired outputs, the perceptron could learn to classify simple patterns. However, a major limitation emerged: perceptrons could only handle linearly separable problems. Imagine separating data points on a graph using a straight line. Perceptrons can do this effectively, but for more complex, non-linear patterns, they fall short.
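The sketch below shows one way to write the weight-adjustment idea in Python, using the classic perceptron update rule. The helper names (`train_perceptron`, `predict`, `accuracy`), the learning rate, and the number of epochs are assumptions made for this example. It trains once on AND, which a straight line can separate, and once on XOR, which it cannot.

```python
def predict(inputs, weights, bias):
    """Fire (1) when the weighted sum plus bias is positive."""
    return 1 if sum(x * w for x, w in zip(inputs, weights)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Fit weights and a bias with the perceptron update rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)  # -1, 0, or +1
            # Nudge each weight toward the desired output; no change if correct.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of samples the perceptron classifies correctly."""
    correct = sum(predict(x, weights, bias) == t for x, t in samples)
    return correct / len(samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND_DATA)
xor_weights, xor_bias = train_perceptron(XOR_DATA)

print("AND accuracy:", accuracy(AND_DATA, and_weights, and_bias))
print("XOR accuracy:", accuracy(XOR_DATA, xor_weights, xor_bias))
```

The AND run reaches full accuracy. The XOR run cannot, however long it trains, because no single straight line separates its two classes.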

Overcoming Limitations: Multilayer Perceptrons and Backpropagation

This limitation motivated multilayer perceptrons (MLPs), which became practical to train in the 1980s. MLPs stack multiple layers of perceptron-like units with non-linear activation functions, creating a network that can learn more complex relationships between inputs and outputs. Each layer learns its own set of weights, allowing the network to progressively extract features and build upon them in subsequent layers.
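A small illustration of why stacking helps: the two-layer network sketched below computes XOR, a pattern no single perceptron can represent. The weights are hand-picked for clarity rather than learned, and the names `step`, `layer`, and `xor_network` are made up for this example.

```python
def step(value):
    """Threshold activation: fire (1) when the input is positive."""
    return 1 if value > 0 else 0


def layer(inputs, weight_rows, biases):
    """Apply one layer; each row of weights defines one perceptron-like unit."""
    return [
        step(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]


# Hand-picked (not learned) weights, chosen only to illustrate the idea.
HIDDEN_WEIGHTS = [[1, 1],   # with bias -0.5: fires if at least one input is on (OR)
                  [1, 1]]   # with bias -1.5: fires only if both inputs are on (AND)
HIDDEN_BIASES = [-0.5, -1.5]
OUTPUT_WEIGHTS = [[1, -2]]  # fires for "OR but not AND", which is XOR
OUTPUT_BIASES = [-0.5]


def xor_network(inputs):
    """Two layers: the hidden layer extracts OR/AND, the output combines them."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, "->", xor_network(pair))
```

The hidden layer turns the raw inputs into two simpler features, and the output unit draws a straight line in that new feature space.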

However, training these deeper networks presented a challenge. How do we efficiently adjust the weights across multiple layers to minimize errors and achieve the desired output? This is where backpropagation comes in. Backpropagation applies the chain rule of calculus to propagate the error signal backwards through the network, layer by layer, which tells us how much each weight contributed to the error. Every weight in every layer can then be adjusted in the direction that reduces that error.
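The following is a minimal sketch of backpropagation for a network with one hidden layer, written in plain Python. The sigmoid activation, mean squared error loss, hidden layer size, learning rate, and random seed are all assumptions chosen for this example; real systems use a library and vectorized code.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Return the hidden activations and the network output for one input."""
    w1, b1, w2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)
    return hidden, output


def loss(params, data):
    """Mean squared error over the data set."""
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)


def gradients(params, data):
    """Backpropagation: push the output error back to every weight."""
    w1, b1, w2, b2 = params
    g_w1 = [[0.0] * len(row) for row in w1]
    g_b1 = [0.0] * len(b1)
    g_w2 = [0.0] * len(w2)
    g_b2 = 0.0
    for x, y in data:
        hidden, output = forward(params, x)
        # Error signal at the output unit (chain rule through MSE and sigmoid).
        delta_out = 2.0 * (output - y) * output * (1.0 - output) / len(data)
        g_b2 += delta_out
        for j, h in enumerate(hidden):
            g_w2[j] += delta_out * h
            # Error signal passed backwards to hidden unit j.
            delta_hidden = delta_out * w2[j] * h * (1.0 - h)
            g_b1[j] += delta_hidden
            for i, xi in enumerate(x):
                g_w1[j][i] += delta_hidden * xi
    return g_w1, g_b1, g_w2, g_b2


def gradient_step(params, grads, learning_rate):
    """Move every parameter a small step against its gradient."""
    w1, b1, w2, b2 = params
    g_w1, g_b1, g_w2, g_b2 = grads
    new_w1 = [[w - learning_rate * g for w, g in zip(row, g_row)]
              for row, g_row in zip(w1, g_w1)]
    new_b1 = [b - learning_rate * g for b, g in zip(b1, g_b1)]
    new_w2 = [w - learning_rate * g for w, g in zip(w2, g_w2)]
    return new_w1, new_b1, new_w2, b2 - learning_rate * g_b2


random.seed(0)  # arbitrary seed so the run is repeatable
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
HIDDEN_UNITS = 3
params = (
    [[random.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN_UNITS)],
    [0.0] * HIDDEN_UNITS,
    [random.uniform(-1, 1) for _ in range(HIDDEN_UNITS)],
    0.0,
)

initial_loss = loss(params, XOR_DATA)
for _ in range(2000):
    params = gradient_step(params, gradients(params, XOR_DATA), learning_rate=0.5)
final_loss = loss(params, XOR_DATA)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

The loss should fall as training proceeds. How close it gets to zero depends on the starting weights and the number of steps, so treat the numbers as illustrative.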

MLPs with backpropagation ignited a revolution in neural networks, demonstrating their potential for tackling complex problems. However, specific architectures were needed to address the unique challenges of different data types.

Unveiling Convolutional Neural Networks (CNNs): The Power of Images

One major challenge involved image recognition. Images are inherently spatial, with pixels arranged in a grid. Standard MLPs treat each pixel as an independent input, ignoring the spatial relationships between pixels. This is where convolutional neural networks (CNNs) come into play.

Developed in the 1980s and popularized in the early 2010s, CNNs are designed for grid-like data such as images. They introduce the concept of convolutional layers. These layers use small filters that slide across the image, extracting features like edges, shapes, and textures. By stacking multiple convolutional layers, CNNs can progressively learn increasingly complex features from the image, ultimately leading to object recognition capabilities.
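Here is a rough sketch of a filter sliding across an image, in plain Python. The function name `convolve2d`, the toy image, and the hand-written vertical-edge filter are assumptions for illustration; in a real CNN the filter values are learned from data. Like most deep learning libraries, the sketch does not flip the filter, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1); return the feature map."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Multiply the filter with the patch under it and sum the result.
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# A tiny 4x5 grayscale "image": dark (0) on the left, bright (1) on the right.
IMAGE = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-written vertical-edge filter; a CNN would learn such values from data.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(IMAGE, VERTICAL_EDGE):
    print(row)
# prints [3, 3, 0] twice: strong response at the dark-to-bright edge, none in the flat region
```

The same nine filter weights are reused at every position, which is how a convolutional layer exploits the spatial layout of pixels instead of treating each one in isolation.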

CNNs revolutionized computer vision. Their ability to automatically learn features from images has led to breakthroughs in areas like facial recognition, medical image analysis, and self-driving cars.

Beyond Images: Recurrent Neural Networks (RNNs) for Sequential Data

While CNNs excel at images, another type of neural network architecture emerged to handle sequential data like text and speech. Recurrent neural networks (RNNs) are designed to process information that unfolds over time.

An RNN can be thought of as a loop. It takes input, processes it, and stores some information in its internal state. When the next element in the sequence is presented, the RNN considers both the new input and the information retained from the previous step. This allows RNNs to learn patterns and relationships within sequences, making them ideal for tasks like language translation, sentiment analysis, and text generation.
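The loop can be sketched in a few lines of Python. This is a deliberately tiny version with a single scalar state and hand-picked weights (`w_input`, `w_hidden`, and `bias` are illustrative assumptions); practical RNNs use vectors, learned weight matrices, and often gated variants.

```python
import math


def rnn_step(x, h_prev, w_input, w_hidden, bias):
    """One recurrent step: combine the new input with the previous state."""
    return math.tanh(w_input * x + w_hidden * h_prev + bias)


def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Feed a sequence through the same cell, carrying the state forward."""
    h = 0.0  # initial state: nothing remembered yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_input, w_hidden, bias)
        states.append(h)
    return states


# Same elements, different order: the final state differs, so order matters.
early = run_rnn([1.0, 0.0, 0.0])
late = run_rnn([0.0, 0.0, 1.0])
print("signal first:", [round(h, 3) for h in early])
print("signal last: ", [round(h, 3) for h in late])
```

When the signal arrives first, its trace fades step by step but is still present in the final state. When it arrives last, the final state reflects it at full strength. That carried-over state is what lets an RNN be sensitive to sequence order.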

Real-World Applications: A Symphony of Architectures in Action

The diverse architectures of neural networks have found applications across numerous domains, transforming various aspects of our lives. Here are some real-world examples showcasing the power of different architectures:

Convolutional Neural Networks (CNNs):

- Image Recognition: CNNs are the driving force behind image recognition breakthroughs. They power features like facial recognition on smartphones, content moderation on social media platforms, and self-driving car technology, where CNNs analyze visual data to identify objects and navigate the environment safely.

- Medical Image Analysis: CNNs are used to analyze medical images like X-rays, CT scans, and mammograms. By identifying patterns and anomalies, CNNs can assist medical professionals in early disease detection and diagnosis.

Recurrent Neural Networks (RNNs):

- Machine Translation: RNNs are a cornerstone of machine translation tools. They can analyze the structure and sequence of words in one language and generate grammatically correct translations in another language.

- Chatbots: RNNs enable chatbots to keep track of the context of a conversation, respond to user queries in natural language, and even generate text such as poems or code.

- Speech Recognition: RNNs are used in speech recognition systems, enabling devices to convert spoken language into text. This technology powers features like voice assistants on smartphones and smart speakers.

These are just a few examples, and the applications of neural networks continue to expand. As architectures evolve and become more sophisticated, we can expect even more groundbreaking advancements in various fields.

Challenges and Considerations: Navigating the Road Ahead

The journey of neural networks is not without its challenges. Here are some key considerations for the future:

- Explainability: Complex neural networks can be like black boxes, making it difficult to understand how they arrive at their decisions. This lack of explainability can raise concerns about bias and fairness in AI systems. Research in explainable AI (XAI) techniques is crucial to ensure transparency and trust in AI.

- Computational Resources: Training large neural networks often requires significant computational resources and power. Advancements in hardware like neuromorphic computing chips and efficient training algorithms are needed to make AI more accessible and scalable.

- Data Bias: Neural networks are susceptible to biases present in the data they are trained on. Mitigating bias requires careful data selection, techniques to debias training data, and ongoing efforts to promote diversity and inclusion in AI development teams.

Addressing these challenges is crucial for the responsible development and deployment of AI. By fostering collaboration between researchers, developers, and policymakers, we can ensure that neural networks continue to empower progress while adhering to ethical principles.
