Foundation models are among the most significant recent advances in artificial intelligence, bringing new capabilities in understanding and generating human-like text. Models like GPT-3.5 (Generative Pre-trained Transformer 3.5) sit at the forefront of natural language processing and are changing how we interact with and use large amounts of textual data. In this article, we'll look at what foundation models are, how they work, where they are applied, and what they may mean for the future.

Understanding Foundation Models

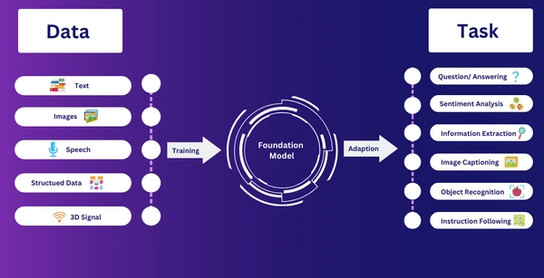

Foundation models are large neural networks designed to process and generate human-like text. They are pre-trained on a substantial corpus of text, much of it from the internet, which lets them learn grammar, context, and recurring patterns of language. This initial pre-training phase gives the model a foundational understanding of human language, so the text it generates often appears strikingly human-like.

The architecture of a foundation model, such as GPT-3.5, is built on the Transformer architecture. This architecture includes multiple layers of self-attention mechanisms, enabling the model to weigh the importance of different words in a given context, thus understanding and generating coherent sentences and paragraphs.

Evolution of Foundation Models

Foundation models have evolved significantly since their inception. GPT-1 had 117 million parameters; GPT-3, the predecessor of GPT-3.5, has 175 billion, an increase of roughly 1,500 times. This increase in scale has contributed directly to better performance, enabling more accurate and contextually relevant responses.
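As a rough check on these figures, a common back-of-the-envelope rule estimates the weights in a transformer's blocks as about 12 × layers × width². The sketch below applies that rule to the layer counts and widths reported for GPT-1 (12 layers, width 768) and GPT-3 (96 layers, width 12,288). The function name is made up for illustration, and the rule ignores embeddings, biases, and layer norms, so treat the results as approximations rather than exact counts.

```python
def approx_transformer_params(n_layers: int, d_model: int) -> int:
    """Rough count of weights in the transformer blocks only.

    Each block has about 4 * d_model^2 attention weights (query, key,
    value, and output projections) and about 8 * d_model^2 feed-forward
    weights (two matrices with a 4x hidden expansion), so roughly
    12 * d_model^2 per layer. Embeddings and biases are ignored.
    """
    return 12 * n_layers * d_model ** 2


# Layer counts and widths as reported for GPT-1 and GPT-3.
gpt1_blocks = approx_transformer_params(n_layers=12, d_model=768)
gpt3_blocks = approx_transformer_params(n_layers=96, d_model=12288)

print(f"GPT-1 blocks: ~{gpt1_blocks / 1e6:.0f}M parameters")
print(f"GPT-3 blocks: ~{gpt3_blocks / 1e9:.0f}B parameters")
```

The estimate lands near 85 million for GPT-1 and 174 billion for GPT-3. Most of the gap to GPT-1's published 117 million comes from the embedding tables, which this rule leaves out.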

Significance of Foundation Models

Foundation models are monumental in the field of AI for several reasons. They have significantly improved natural language understanding and generation, enabling computers to process and generate human-like text at an unprecedented level of complexity and coherence. This advancement has far-reaching implications for various sectors, from healthcare to marketing, where effective communication is paramount.

These models serve as the base or foundation for a multitude of applications. The ability to pretrain models on massive amounts of data allows them to learn language patterns and general knowledge, while fine-tuning enables tailoring their capabilities for specific tasks. The sheer scale and versatility of these models make them a cornerstone of contemporary AI research and applications.

Architecture: The Transformer Model

At the heart of most foundation models lies the transformer architecture. The transformer model is renowned for its efficiency in handling sequential data, making it ideal for processing and generating text. Its attention mechanism allows the model to weigh different parts of the input text differently, capturing dependencies and relationships effectively.

The original transformer consists of an encoder-decoder structure, each half built from multiple layers of self-attention. The encoder processes the input text, while the decoder generates the output, typically conditioned on the input. GPT-style models such as GPT-3.5 keep only the decoder stack, which generates text one token at a time, conditioned on the tokens that came before. This design scales to a vast number of parameters, which is what makes it a practical foundation for such models.
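To make the attention mechanism concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not how any production model is implemented: real models use learned projection matrices to produce queries, keys, and values, run many attention heads in parallel, and operate on tensors. Here the toy embeddings are used directly as queries, keys, and values. The `causal` flag shows the masking used during generation, where each position may attend only to itself and earlier positions.

```python
import math


def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over a short sequence of vectors.

    Returns the attended outputs and the attention weights per position.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                # Mask out future positions so they get zero weight.
                scores.append(float("-inf"))
            else:
                dot = sum(a * b for a, b in zip(q, k))
                scores.append(dot / math.sqrt(d_k))
        weights = softmax(scores)
        all_weights.append(weights)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs, all_weights


# Three toy "token" vectors (assumed values, for illustration only).
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings, embeddings, embeddings, causal=True)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one position attends to every position in the sequence. With `causal=True`, the first token can only attend to itself, so its row puts all of its weight on the first position.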

Training Process: Pretraining and Fine-Tuning

The training of foundation models involves two key steps: pretraining and fine-tuning.

1. Pretraining

In this initial phase, the model is trained on a massive corpus of text data, such as parts of the internet. The objective is for the model to predict the next word in a sequence, which encourages it to learn grammar, context, and world knowledge.

2. Fine-Tuning

After pretraining, the model is trained further on data relevant to a particular task. This data is usually much smaller than the pretraining corpus, is often labeled, and is focused on the domain or application of interest. Fine-tuning allows the model to adapt and specialize its knowledge and skills.

The combination of these steps equips the model to handle a wide array of tasks, making foundation models versatile and powerful.
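The two steps can be sketched with a deliberately tiny stand-in for a neural network: a bigram model that predicts the next word by counting which word follows which. This is only an analogy. Real foundation models learn by gradient descent on billions of neural network weights, not by counting, and the class, corpora, and variable names below are all invented for illustration. What the sketch does share with the real process is the objective (predict the next word, measured by average negative log-likelihood) and the order of training (broad text first, task-specific text second).

```python
import math
from collections import Counter, defaultdict


class BigramModel:
    """Toy next-word predictor: counts which word follows which."""

    def __init__(self, vocab):
        self.vocab = set(vocab)  # fixed vocabulary, like a fixed tokenizer
        self.counts = defaultdict(Counter)

    def train(self, text):
        """Add the word pairs in `text` to the counts (may be called repeatedly)."""
        words = text.split()
        for prev, nxt in zip(words, words[1:]):
            self.counts[prev][nxt] += 1

    def prob(self, prev, nxt):
        """P(next | prev) with add-one smoothing over the vocabulary."""
        following = self.counts[prev]
        return (following[nxt] + 1) / (sum(following.values()) + len(self.vocab))

    def loss(self, text):
        """Average negative log-likelihood of each next word (lower is better)."""
        words = text.split()
        pairs = list(zip(words, words[1:]))
        return -sum(math.log(self.prob(p, n)) for p, n in pairs) / len(pairs)


general_corpus = "the cat sat on the mat . the dog sat on the rug ."
domain_corpus = "the patient sat on the bed . the nurse saw the patient ."
vocab = set((general_corpus + " " + domain_corpus).split())

model = BigramModel(vocab)
model.train(general_corpus)        # "pretraining" on broad text
held_out = "the patient saw the nurse ."
loss_before = model.loss(held_out)

model.train(domain_corpus)         # "fine-tuning" on task-specific text
loss_after = model.loss(held_out)

print(f"loss on domain text before fine-tuning: {loss_before:.2f}")
print(f"loss on domain text after fine-tuning:  {loss_after:.2f}")
```

The loss on the domain sentence drops after the second round of training, which is the effect fine-tuning aims for: the model keeps what it learned from the general text and becomes better at predicting text from the target domain.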

Applications of Foundation Models

The applications of foundation models are extensive and diverse, spanning various domains:

1. Text Generation

Foundation models excel at generating human-like text, making them invaluable for tasks like content creation, storytelling, and creative writing.

2. Language Translation

These models can be fine-tuned to provide high-quality language translation services, breaking down language barriers and fostering global communication.

3. Summarization

Foundation models can generate concise summaries of lengthy texts, facilitating quicker comprehension of large documents.

4. Chatbots and Conversational AI

They power intelligent chatbots that can engage in natural and contextually relevant conversations, enhancing customer service and user interaction.

5. Information Extraction

Foundation models assist in extracting relevant information from unstructured data, aiding in data analysis and information retrieval.

Future Trends and Possibilities

The trajectory of foundation models is exciting, with researchers continually pushing the boundaries. Future models are expected to be more efficient, interpretable, and capable of understanding and generating even more nuanced and contextually relevant text. Furthermore, addressing ethical concerns and ensuring the inclusivity and fairness of these models will be at the forefront of future developments.

Foundation models already mark a major milestone in AI and NLP, and their potential is being realized across diverse domains. As researchers and practitioners collaborate to shape how these models evolve, AI is likely to augment human capabilities in more and more areas and to open up opportunities that were previously out of reach.

Conclusion

Foundation models represent a paradigm shift in AI, transcending language understanding and generation to empower various sectors. Their scale, efficiency, and adaptability make them a crucial asset for AI developers, researchers, and practitioners. As we embrace responsible AI practices and navigate ethical considerations, we can look forward to a future where foundation models drive innovation and augment human capabilities, transforming the world we live in.

In this journey, collaboration, transparency, and a commitment to ethical use will be essential to unlock the true potential of foundation models, propelling us into an era where artificial intelligence reshapes our understanding of what's possible.
