As humans, we generally spend our lives observing our surroundings using optic nerves, retinas, and the visual cortex. We gain context to differentiate between objects, gauge their distance from us and other objects, calculate their movement speed, and spot mistakes. Similarly, computer vision enables AI-powered machines to train themselves to carry out these very processes. These machines use a combination of cameras, algorithms, and data to do so. Today, computer vision is one of the hottest subfields of artificial intelligence and machine learning, given its wide variety of applications and tremendous potential. Its goal is to replicate the powerful capacities of human vision.

Computer vision needs a large database to be truly effective. This is because these solutions analyze information repeatedly until they gain every possible insight required for their assigned task. For instance, a computer trained to recognize healthy crops would need to ‘see’ thousands of visual reference inputs of crops, farmland, animals, and other related objects. Only then would it effectively recognize different types of healthy crops, differentiate them from unhealthy crops, gauge farmland quality, detect pests and other animals among the crops, and so on.

How Does Computer Vision Work?

Computer vision primarily relies on pattern recognition techniques to self-train and understand visual data. The wide availability of data, and the willingness of companies to share it, has made it possible for deep learning experts to make the process faster and more accurate.

Generally, computer vision works in three basic steps:

1: Acquiring the image. Images, even large sets, can be acquired in real-time through video, photos, or 3D technology for analysis.

2: Processing and annotating the image. The models are trained by first being fed thousands of labeled or pre-identified images. The collected data is cleaned according to the use case and the labeling is performed.

3: Understanding the image. The final step is the interpretative step, where an object is identified or classified.

What is training data?

Training data is a set of samples, such as videos and images, with assigned labels or tags. It is used to train a computer vision algorithm or model to perform the desired function or make correct predictions. Training data goes by several other names, including learning set, training set, and training dataset. During training, the model passes over the dataset repeatedly to learn its traits and fine-tune itself for optimal performance.

Just as human beings learn better from examples, computers also need examples to begin noticing patterns and relationships in the data. But unlike human beings, computers require a great many examples because they don’t think as humans do. In fact, they don’t see objects or people in the images. For example, training a model to recognize different sentiments from videos takes a lot of work and a huge dataset. Thus a huge amount of data needs to be collected for training.

Types of training data

Images, videos, and sensor data are commonly used to train machine learning models for computer vision. The types of training data used include:

2D images and videos: These datasets can be sourced from scanners, cameras, or other imaging technologies.

3D images and videos: They’re also sourced from scanners, cameras, or other imaging technologies.

Sensor data: It’s captured using remote technology such as satellites.

Training Data Preparation

If you plan to use a deep learning model for classification or object detection, you will likely need to collect data to train your model. Many deep learning models are available pre-trained to detect or classify a multitude of common daily objects such as cars, people, bicycles, etc. If your scenario focuses on one of these common objects, then you may be able to simply download and deploy a pre-trained model for your scenario. Otherwise, you will need to collect and label data to train your model.

Data Collection

Data collection is the process of gathering relevant data and arranging it to create data sets for machine learning. The type of data (video sequences, frames, photos, patterns, etc.) depends on the problem that the AI model aims to solve. In computer vision, robotics, and video analytics, AI models are trained on image datasets with the goal of making predictions related to image classification, object detection, image segmentation, and more. Therefore, the image or video data sets should contain meaningful information that can be used to train the model for recognizing various patterns and making recommendations based on the same.

The characteristic situations need to be captured to provide the ground truth for the ML model to learn from. For example, in industrial automation, image data needs to be collected that contains specific part defects. Therefore, a camera needs to gather footage from assembly lines to provide video or photo images that can be used to create a dataset.

The data collection process is crucial for developing an efficient ML model. The quality and quantity of your dataset directly affect the AI model’s decision-making process. And these two factors determine the robustness, accuracy, and performance of the AI algorithms. As a result, collecting and structuring data is often more time-consuming than training the model on the data.

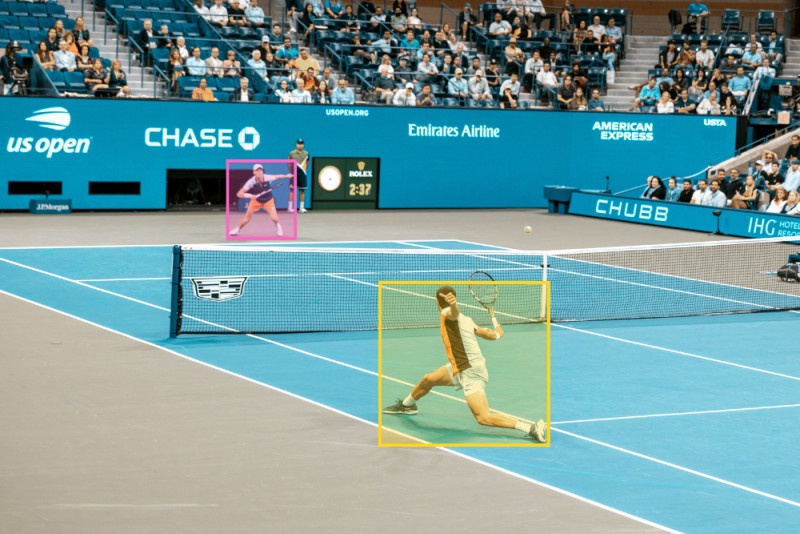

Data annotation

Data collection is followed by data annotation, the process of manually providing information about the ground truth within the data. In simple words, image annotation is the process of visually indicating the location and type of objects that the AI model should learn to detect. For example, to train a deep learning model for detecting cats, image annotation would require humans to draw boxes around all the cats present in every image or video frame. In this case, the bounding boxes would be linked to the label named “cat.” The trained model will then be able to detect the presence of cats in new images.
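To make the cat example concrete, here is a minimal sketch of how one annotated image might be stored. The `BoundingBox` class, the file name, and all coordinates are hypothetical and chosen only for illustration; real labeling tools each use their own export formats.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """One labeled box in pixel coordinates (origin at the top-left corner)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Width times height, clamped so a malformed box never yields a negative area.
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


# Hypothetical annotations for a single image: two cats, each with its own box.
annotations = {
    "image": "frame_0001.jpg",
    "boxes": [
        BoundingBox("cat", 40, 60, 200, 220),
        BoundingBox("cat", 310, 90, 420, 240),
    ],
}

# Every box is linked to a label, so selecting all cats is a simple filter.
cat_boxes = [box for box in annotations["boxes"] if box.label == "cat"]
print(len(cat_boxes), [box.area() for box in cat_boxes])
```

Storing the corner coordinates rather than a center and size is just one possible convention; whichever one is chosen needs to be applied consistently across the whole dataset.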

Once you have collected a good set of images, you will need to label them. Several tools exist to facilitate the labeling process. These include open-source tools such as labelImg and commercial tools such as Azure Machine Learning, which support image classification and object detection labeling. For large labeling projects, it is recommended to select a labeling tool that supports workflow management and quality reviews. These features are essential to ensure quality and efficiency in the labeling process. Labeling is a very tedious job, so companies often prefer to outsource it to third-party labeling vendors like TagX, who take care of the whole labeling process.

What are the labels?

Labels are what the human-in-the-loop uses to identify and call out features that are present in the data. It’s critical to choose informative, discriminating, and independent features to label if you want to develop high-performing algorithms in pattern recognition, classification, and regression. Accurately labeled data can provide ground truth for testing and iterating your models.

Label Types of Computer Vision Data Annotation

Currently, most computer vision applications use a form of supervised machine learning, which means we need to label datasets to train the applications.

Choosing the correct label type for an application depends on what the computer vision model needs to learn. Below are four common types of annotation.

2D Bounding Boxes

Bounding boxes are one of the most commonly relied-on techniques for computer vision image annotation. The technique is simple: all the annotator has to do is draw a box around the target object. For a self-driving car, target objects would include pedestrians, road signs, and other vehicles on the road. Data scientists choose bounding boxes when the shape of target objects is less of an issue. One popular use case is recognizing groceries in an automated checkout process.

3D Bounding Boxes

Not all bounding boxes are 2D. Their 3D cousins are called cuboids. Cuboids create object representations with depth, allowing computer vision algorithms to perceive volume and orientation. For annotators, drawing cuboids means placing and connecting anchor points. Depth perception is critical for locomotive robots. Understanding where to place items on shelves involves an understanding of more than just height and width.
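As a rough sketch of what a cuboid label carries beyond a 2D box, the snippet below stores a center, three dimensions, and a rotation about the vertical axis, then derives the eight corner points. The `Cuboid` class and its field names are assumptions made for this example; annotation tools differ in how they parameterize cuboids.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """A 3D box: center, size, and yaw (rotation about the vertical z axis, in radians)."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float = 0.0

    def volume(self) -> float:
        return self.length * self.width * self.height

    def corners(self):
        """Return the 8 corner points as (x, y, z) tuples."""
        cos_yaw, sin_yaw = math.cos(self.yaw), math.sin(self.yaw)
        points = []
        for sx in (-0.5, 0.5):
            for sy in (-0.5, 0.5):
                for sz in (-0.5, 0.5):
                    # Offset from the center in the box's own frame.
                    lx, ly, lz = sx * self.length, sy * self.width, sz * self.height
                    # Rotate the horizontal offset by yaw, then translate to the center.
                    x = self.cx + cos_yaw * lx - sin_yaw * ly
                    y = self.cy + sin_yaw * lx + cos_yaw * ly
                    points.append((x, y, self.cz + lz))
        return points


# Hypothetical item on a shelf; all numbers are made up.
item = Cuboid("box", cx=1.0, cy=2.0, cz=0.5, length=2.0, width=1.0, height=1.0)
print(item.volume(), len(item.corners()))
```

The depth and orientation fields are what let a model reason about volume and facing direction, which a flat rectangle cannot express.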

Landmark Annotation

Landmark annotation is also called dot/point annotation. Both names fit the process: placing dots or landmarks across an image, and plotting key characteristics such as facial features and expressions. Larger dots are sometimes used to indicate more important areas.

Skeletal or pose-point landmark annotations reveal body position and alignment. These are commonly used in sports analytics. For example, skeletal annotations can show where a basketball player’s fingers, wrist, and elbow are in relation to each other during a slam dunk.
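One way to picture how pose points relate to each other is to compute the angle at a joint from three annotated landmarks. The sketch below assumes landmarks are stored as pixel `(x, y)` pairs in a plain dictionary; the keypoint names and coordinates are hypothetical.

```python
import math

# Hypothetical pose-point annotation for one video frame (pixel coordinates).
keypoints = {
    "fingers": (120, 40),
    "wrist": (110, 70),
    "elbow": (80, 110),
}


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("landmarks must not coincide with the joint")
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# How bent is the wrist, judging by the fingers and elbow landmarks?
wrist_angle = joint_angle(keypoints["fingers"], keypoints["wrist"], keypoints["elbow"])
print(round(wrist_angle, 1))
```

Tracking a value like this frame by frame is the kind of measurement skeletal annotations make possible in sports analytics.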

Polygons

Polygon segmentation introduces a higher level of precision for image annotations. Annotators mark the edges of objects by placing dots and drawing lines. Hugging the outline of an object cuts out the noise that other image annotation techniques would include. Shearing away unnecessary pixels becomes critical when it comes to irregularly shaped objects, such as bodies of water or areas of land captured by autonomous satellites or drones.
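The "noise" a polygon removes can be estimated by comparing the polygon's area with the area of the bounding box around the same points. The sketch below uses the shoelace formula on a made-up L-shaped outline; the function names and the outline are illustrative assumptions, not part of any particular tool.

```python
def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    count = len(points)
    for i in range(count):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % count]  # wrap around to close the outline
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_box_area(points):
    """Area of the axis-aligned box that encloses all points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Hypothetical L-shaped object outline, in pixel coordinates.
outline = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]

object_area = polygon_area(outline)
box_area = bounding_box_area(outline)

# Share of the bounding box that is background rather than object.
background_share = 1 - object_area / box_area
print(object_area, box_area, background_share)
```

For this outline, more than half of the enclosing box is background, which illustrates why irregular shapes benefit from polygon labels.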

Final thoughts

Training data is the lifeblood of your computer vision algorithm or model. Without relevant, labeled data, everything is rendered useless. The quality of the training data is also an important factor to consider when training your model. Training data does more than train algorithms to make predictions as accurately as possible. It is also used to retrain or update your model, even after deployment. This is because real-world situations change often, so your original training dataset needs to be continually updated.

If you need any help, contact us to speak with an expert at TagX. From data collection and data curation to quality data labeling, we have helped many clients build and deploy AI solutions in their businesses.
